Need of a third-party SQL client editor

In the last few months, I have been working on the deployment of an Apache Druid cluster for the first time in a production environment. In this blog story, I want to share a way to create a DataGrip Driver that is able to connect to a Druid cluster and perform SQL queries.

Querying Druid using a third-party client application became necessary as more and more of my colleagues needed to get access to our Druid data sources during the development stages. While moving to production would make things even worse to manage the users and finally take the non-admins out of the Druid Router’s UI.

While searching for a solution on the web, I couldn’t find a convenient one. At first, I thought that using Apache Superset’s SQL lab backed by pydruid Python library could do the job for me, but it was clearly an overkill as I didn’t intend to use any other of Superset’s features.

My team had already used Apache Calcite Avatica’s JDBC driver to connect Tableau to Apache Druid with success. I regularly use DataGrip to query different database systems as it has support for a vast amount of them. So I looked into the custom Drivers that DataGrip offers, and by adding the Avatica connector jar along with some others, I got a working driver. It was the best solution as it was easy to communicate with other team members and didn’t make us use a new tool and spend even more time getting accustomed to it.

Enough with the back story, let’s open DataGrip to create a Driver and a Data source to query Druid.

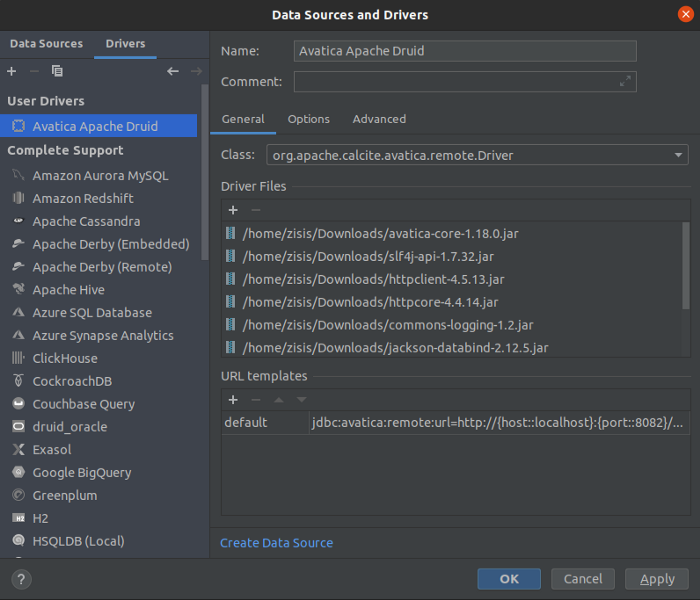

Creating the custom Driver

- Navigate to File > New > Driver, to open Data Sources and Drivers window.

- In the new window, change Name field of the driver to Avatica Apache Druid (or anything else you want to recognize it).

- Go to Driver Files hit+ button, and upload all the jar files, which can be found in the jars folder of my Github repo.

- Select org.apache.calcite.avatica.remote.Driver in the Classdrop down menu.

- Add a URL template, name it default and set value to:

jdbc:avatica:remote:url=http://{host::localhost}:{port::8082}/druid/v2/sql/avatica/

- Go to tab Options, and there in the Other section, select Generic SQL from the drop-down menu.

- Apply to save Driver.

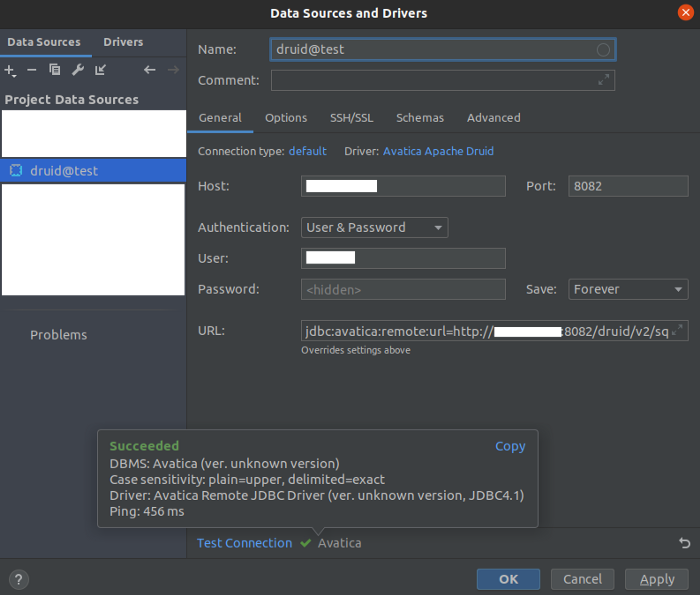

Creating a new data source using the custom Driver

- Navigate to File > New > Data Source > {Druid Driver name}

- Change host and port if needed (default port is set to 8082 because it is the default plain text port of Broker node)

- Test connection

- Apply to save Data source

I haven’t yet tried to connect to a Broker node using TLS but I believe it won’t be a problem as it is possible while using the Avatica driver with Tableau.

Limitations

- The main drawback of this workaround is that using Generic SQL dialect autocomplete is not available, and keyword highlighting is limited to classic functions such as count. Currently, there is an open issue on the ability to add custom dialects in DataGrip.

- The absence of auto-limit option could be disastrous, leading to querying heavy loads of data and even render the Historical node unavailable.